Data centres in 2026: Where things are heading

Inference is rising, data centres are diverging, and resources are the prize.

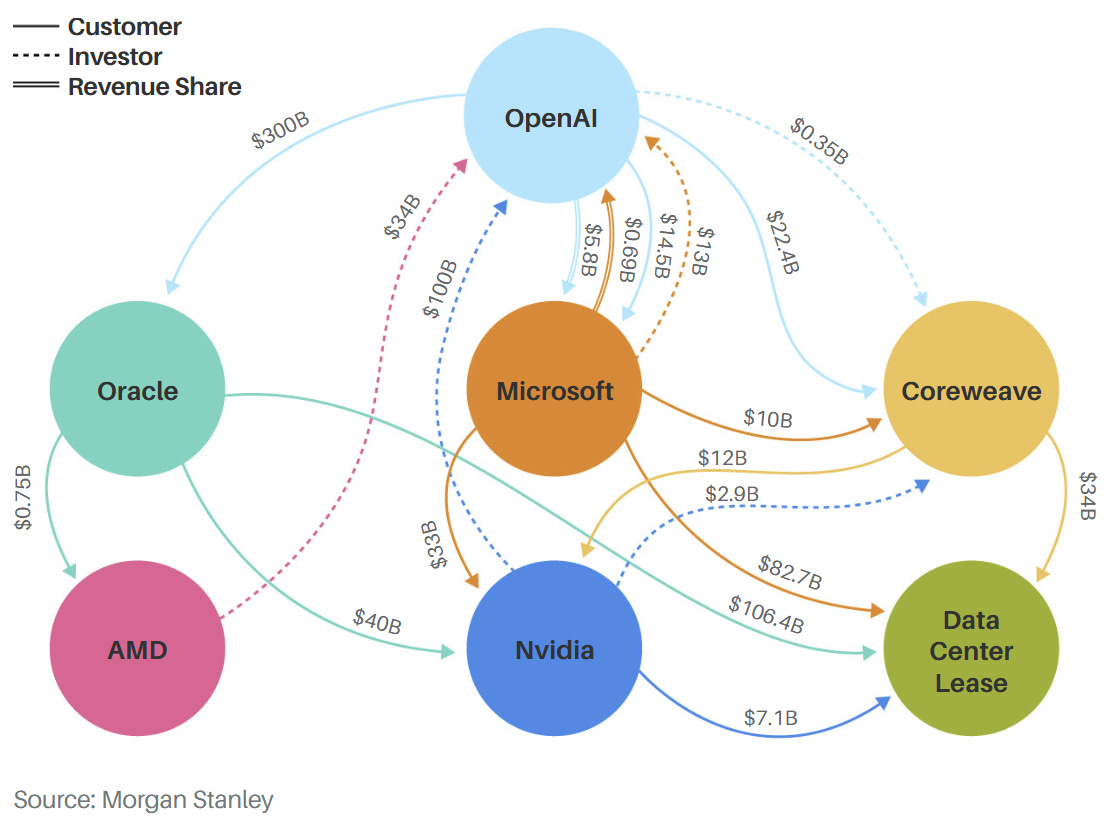

Data centre announcements and developments came fast and furious over the holiday season. Whatever your stance on the continued headlong rush to build up AI infrastructure, the circular financing, and the controversial way GPU depreciation is being taken off the books, there is no doubt that this is still where the money is.

After all, this is why memory makers such as Samsung, SK hynix, and Micron have redirected fab capacity from commodity PC DRAM to high-margin AI memory (HBM). And I’ve heard of at least two hyperscalers ramping up their data centre investments.

Without overthinking it, here are my thoughts about where I see things heading with AI and data centres in 2026.

The rise of AI inference

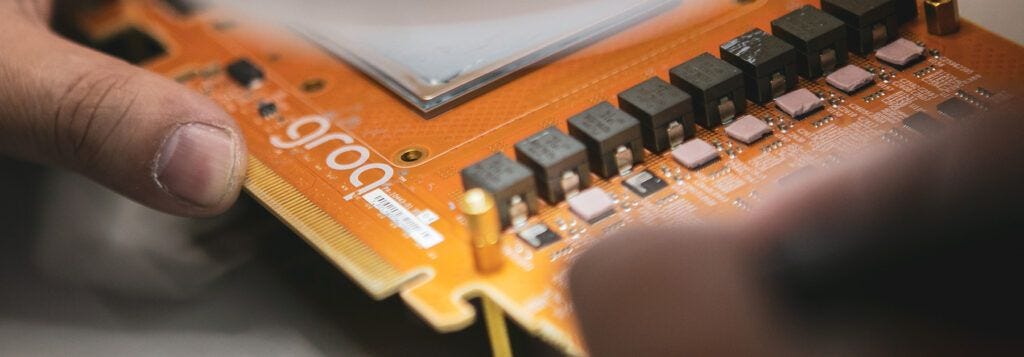

The day before Christmas, Nvidia pulled off a surprise acquisition of Groq, in what is the company’s largest deal on record. According to some reports I read, it was closed “very quickly.” Did Nvidia offer a “take it now or forget it” deal to force Groq’s hand after the latter sharply cut its 2025 revenue forecast? And did the delay of Groq’s major supply deal with Saudi Arabia play a part? I have a sneaking suspicion that both did.

What is Nvidia doing by acquiring Groq? Some would argue that Nvidia just bought out its most promising competitor. But to hear Nvidia CEO Jensen Huang tell it, this is all about AI inference, which apparently already accounts for more than 40% of Nvidia's AI-related revenue and which he predicts will rise sharply.

From this vantage point, it is easy to see why Nvidia is working hard to further strengthen its already formidable stack by co-opting promising AI inference technology, ensuring that it gets its hands on all parts of the market. No matter that enterprises are still struggling with AI use cases; market leader Nvidia has clearly voted with its money.

AI data centres pull further ahead

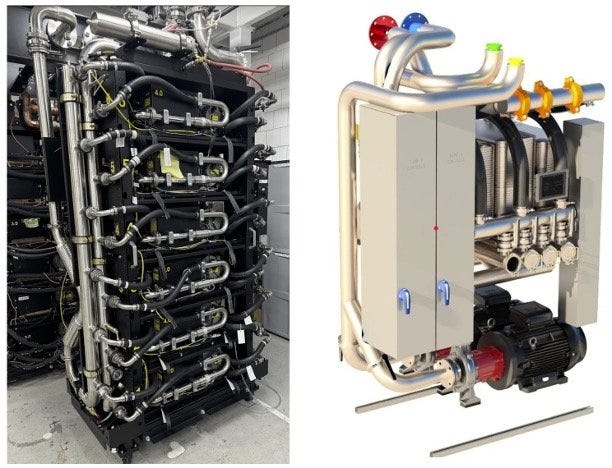

For the just-published issue of w.media’s magazine, titled “The race to 600kW”, I had to read up on Nvidia's Vera Rubin platform, which will be released in three broad implementation phases starting this year. The first phase will see NVL72-style systems that serve as direct successors to today's cutting-edge GB300 NVL72. Vera Rubin NVL144 will push rack IT power to the 300kW range, while the Rubin Ultra NVL576 will eliminate fans entirely and run at 600kW per rack.

What is my point here? Nvidia’s GB300 NVL72 system today draws up to 140kW per rack and requires data centres designed from the ground up for high-density liquid cooling. But just when operators are finally getting their act together and vendors are rolling out parts such as coolant distribution units (CDUs), Vera Rubin pulls the rug from under everyone’s feet.

Supporting 600kW will necessitate a complete rethinking of power delivery, cooling systems, and physical infrastructure. This includes reinforced floors, the use of DC busways, and powerful new cooling systems that might exist only as prototypes. Crucially, it pulls AI data centres even further away from those designed for traditional workloads, essentially forcing operators to build for one or the other. Not both.

When the race comes down to power

Finally, data centres are increasingly devolving into a race for resources. Think about it: assuming practically unlimited access to funds and a determination to outbuild rivals, the only constraint on data centre buildouts is power. And this is how it has played out in the United States, where technology giants have embarked on a race to build ever-larger data centres.

Initially, this meant reactivating decommissioned nuclear power plants, snapping up choice sites with access to spare power, or building at the boundary of two or more states for greater speed to market. Of late, it is about hoovering up pockets of excess grid capacity and connecting remote AI data centre campuses into an “AI superfactory.”

The situation is more nuanced in Asia Pacific, given the varying maturity of its data centre markets and the fact that data centre investments there are a couple of steps behind. But there is a similar pattern of speculative bets and a rush to secure sites with access to energy. In a growing number of cases, this is also driving investments in renewables, based on informal chats I’ve had. I plan to explore this topic more this year.

AI inference is rising. Data centres are diverging. And power is the prize. Whether the circular financing and controversial GPU depreciation signal a bubble or the start of a supercycle, these are the forces that will shape data centres in 2026. It's going to be an interesting year.

A section of this first appeared in the commentary of my free Tech Stories newsletter that goes out every Sunday. This newsletter is a digest of other stories that I wrote in the preceding week. To get it in your inbox, sign up here.