We're all thinking about AI wrong

Stop asking what AI is capable of. Ask what you have to say.

AI is the topic we all like to talk about, mainly because it continues to befuddle us with its jagged brilliance. I mean, it can be phenomenal at some things, yet so terrible at others. And let’s not get started with the hallucinations that some experts say will never be completely eradicated.

Moreover, each time we think we have it figured out, someone comes up with innovative new ways to use it, or another wave of models is released that demonstrates new capabilities or emergent properties.

But perhaps we are all getting it wrong. Maybe what really matters is not what AI can or cannot do. It isn’t about taking a stance between entrusting it with everything or vilifying it and refusing to go anywhere near a chat prompt. Instead of putting AI into a box, we need to see it for what it is: a digital shed packed with a plethora of tools, ready to be shaped by how we choose to use it - or misuse it.

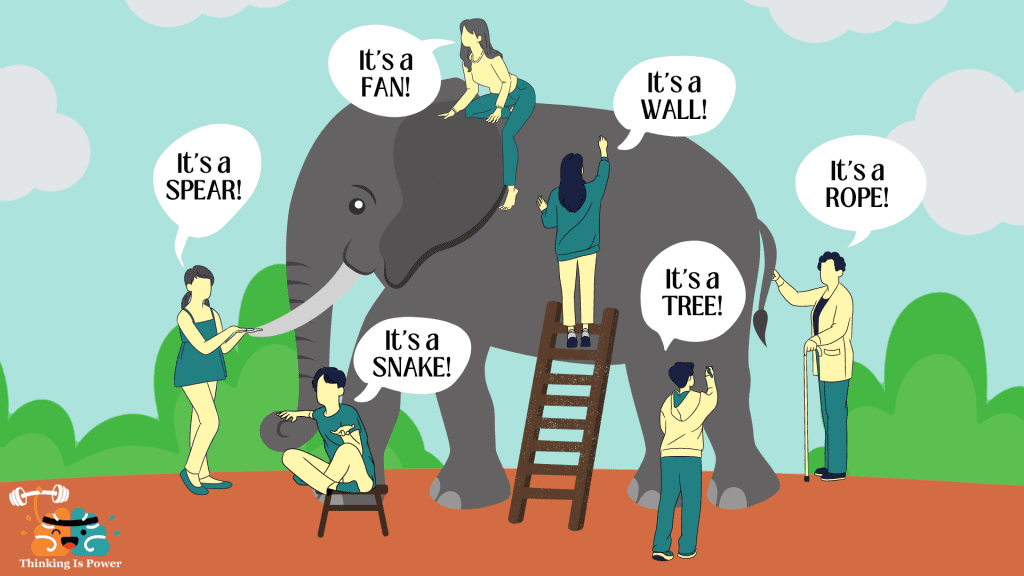

The blind men and AI

In the classic blind men and the elephant parable, each person touches a different part of the animal and draws wildly different conclusions. None of them are wrong, yet they all completely miss the bigger picture.

Each time I write about the use of generative AI in writing, I receive wildly different responses. Some condemn its use with gusto, while others assert that good AI outcomes lie with the thought process or the presence of original ideas.

A third group challenges me on what they perceive as a personal attack on how they use AI. What does it matter, they ask, as long as they take ownership of the final output? It is worth noting that these respondents are seasoned professionals, some of whom are successful business leaders with regional or country-level remits.

I’ll be honest. It took me a long time to figure this out. Now I will say: none of them are wrong. But everyone is also missing something. Those who condemn AI outright are closing themselves off to new tools. Those who emphasize the thought process have a point, but it doesn’t explain why so much AI-written content is still so bad. And those in the ownership camp ignore that mediocre output is still mediocre, no matter who takes ownership of it.

And it’s not even our fault. After all, AI is so inherently groundbreaking, so radically different, and improving so continually that we really have no frame of reference and are all still trying to make sense of it.

The linguistic camouflage

Before I go on, I want to say that I believe generative AI will change the world as we know it. However, if you read my posts regularly, you will surely know about my frustration with AI-generated content on social media. My beef isn’t with the large language model (LLM) itself. It is with the use of AI to beautify poor or shallow writing, making it appear insightful until you actually read it.

But read closely and the cracks show up quickly. What’s worse is how AI excels at synthetic coherence that makes even erroneous arguments read well. I do loathe wasting precious time on a piece of content, only to discover upon finishing it that it’s nothing more than AI slop.

This is the antithesis of why I write, which is to craft compelling, easy-to-understand stories that offer genuine insights. Is it possible to generate exceptional content using AI? I don’t doubt it. But in my experience, doing that takes significant effort and skill in working with AI.

This is because today’s AI has a tendency to skew towards the most common patterns, making everything sound the same. A strong writer can make it work with enough effort, though at some point one wonders if they’d be better off just writing it by hand. It is for this reason that I think the best content will continue to be human-written for a long time to come. I’m not arguing against using AI. I’m merely saying that its use can’t replace the craftsmanship and discernment needed to frame and write a good story.

The practical path

When we look beyond the grandiose and frankly unrealistic claims being made about AI, I genuinely feel that the technology will help improve work. In my own ongoing journey since the public release of ChatGPT in November 2022, I’ve stumbled on various ways that work for me.

Here are some examples of how AI has helped me.

Last week, I was invited to a media briefing at Cloudflare’s Singapore office. With my handwritten notes on my reMarkable and an AI-generated transcript of the audio (Otter), I was able to quickly locate relevant pointers for my post. This cut the usual writing time by a third, allowing me to post it in time to attend a second event that afternoon.

I have relied on various leading AI models such as Gemini Pro and Claude Opus to critique some of the long-form content that I write online or for clients. I don’t accept every recommendation, but I dare say it’s allowed me to produce significantly better output without the benefit of a human copyeditor.

AI has also proved invaluable in copywriting. When crafting a messaging framework, for instance, getting key descriptions and headers right is absolutely vital. This is where AI services such as ChatGPT and Perplexity have helped me save a tremendous amount of time as I craft and fine-tune them.

Which leads me to my point for today’s piece. I believe there is a segment of people who are quietly dabbling with AI to improve and enhance their work. They are not looking for prompt packs or quick-to-obsolete techniques but are surgically using AI to amplify the quality and volume of their output.

A calculator doesn’t make someone a mathematician; a spell-checker doesn’t make someone a writer. AI amplifies existing capability rather than replacing it. When it comes to writing, the question isn’t whether to use AI. It’s whether you have something to say.

A version of this first appeared in the commentary of my free Tech Stories newsletter that goes out every Sunday. This newsletter comes with a digest of other stories that I wrote in the preceding week. To get it in your inbox, sign up here.